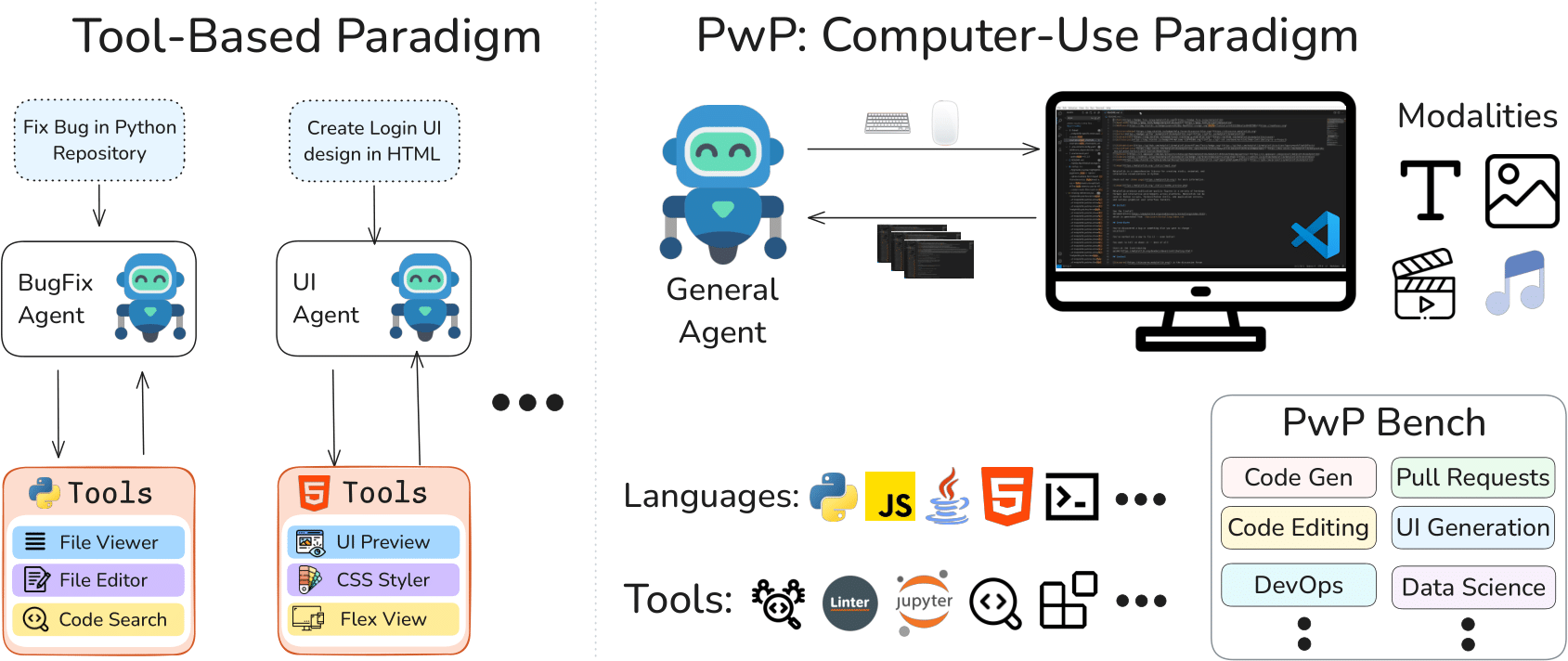

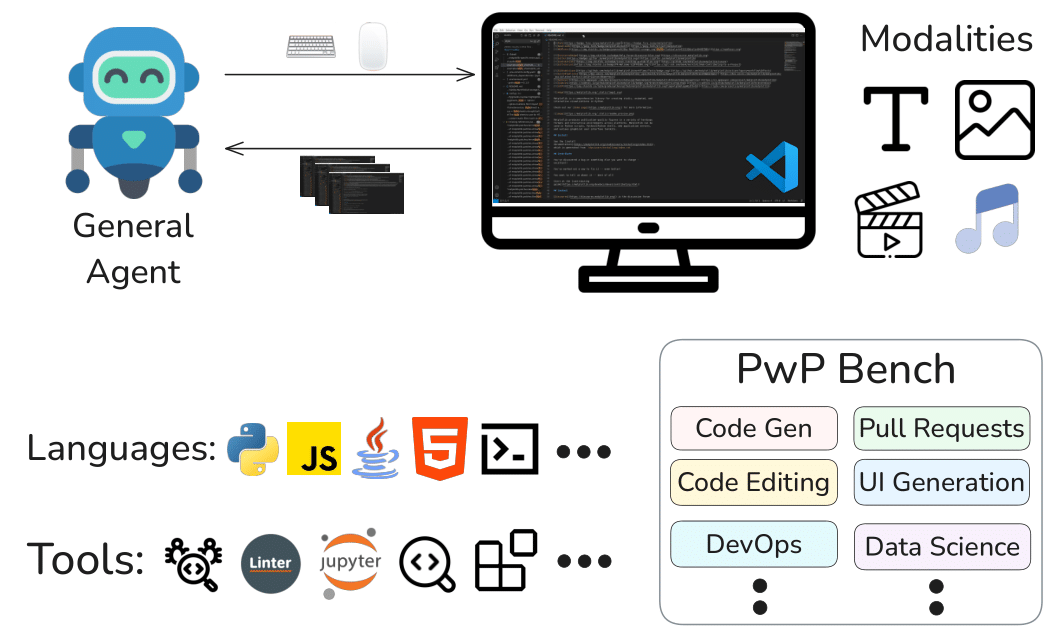

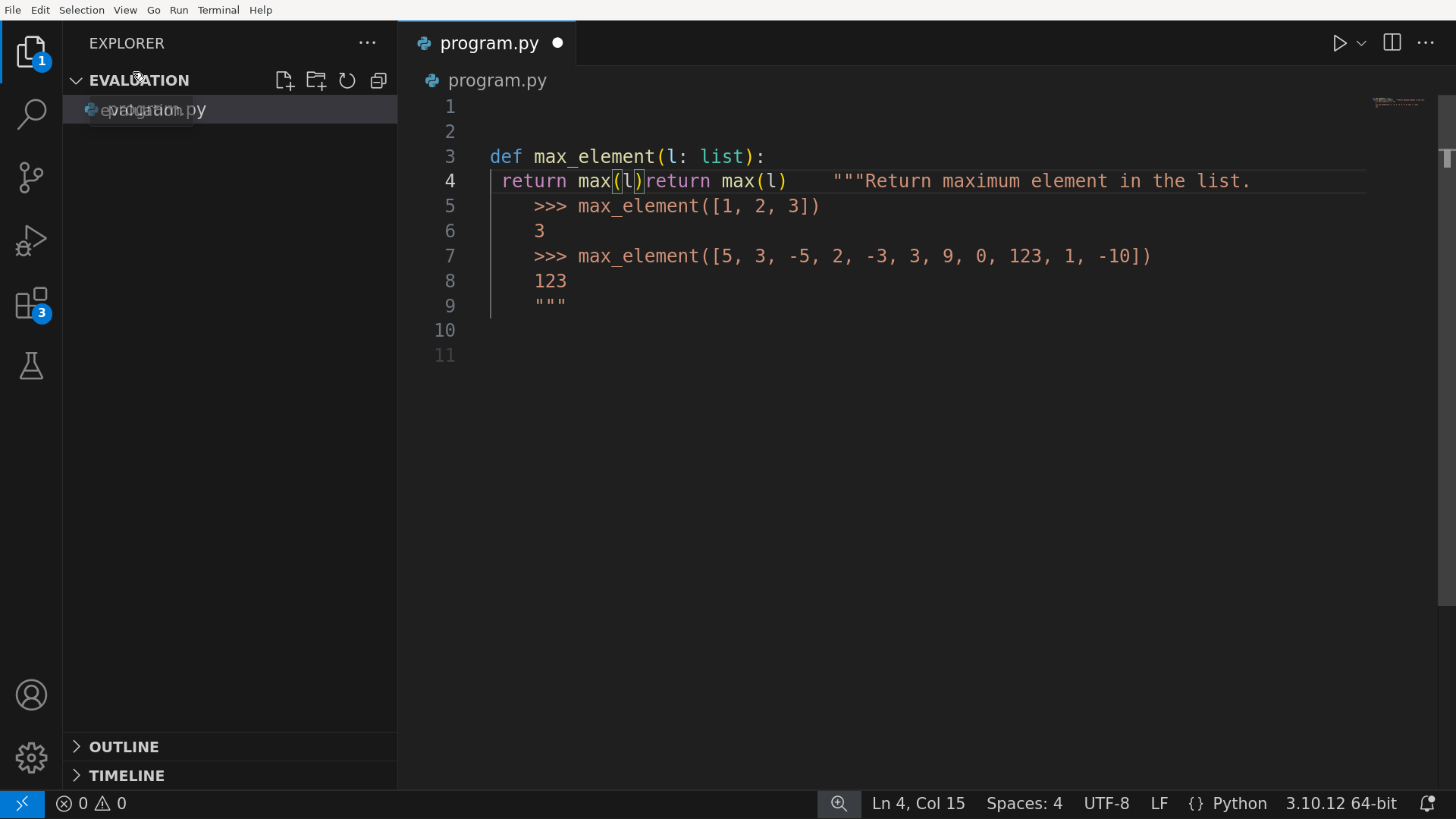

Our motivating hypothesis is that achieving general-purpose Software Engineering (SWE) agents requires a shift to computer-use agents that interact with computers as humans do: by observing the screen, typing, and clicking.

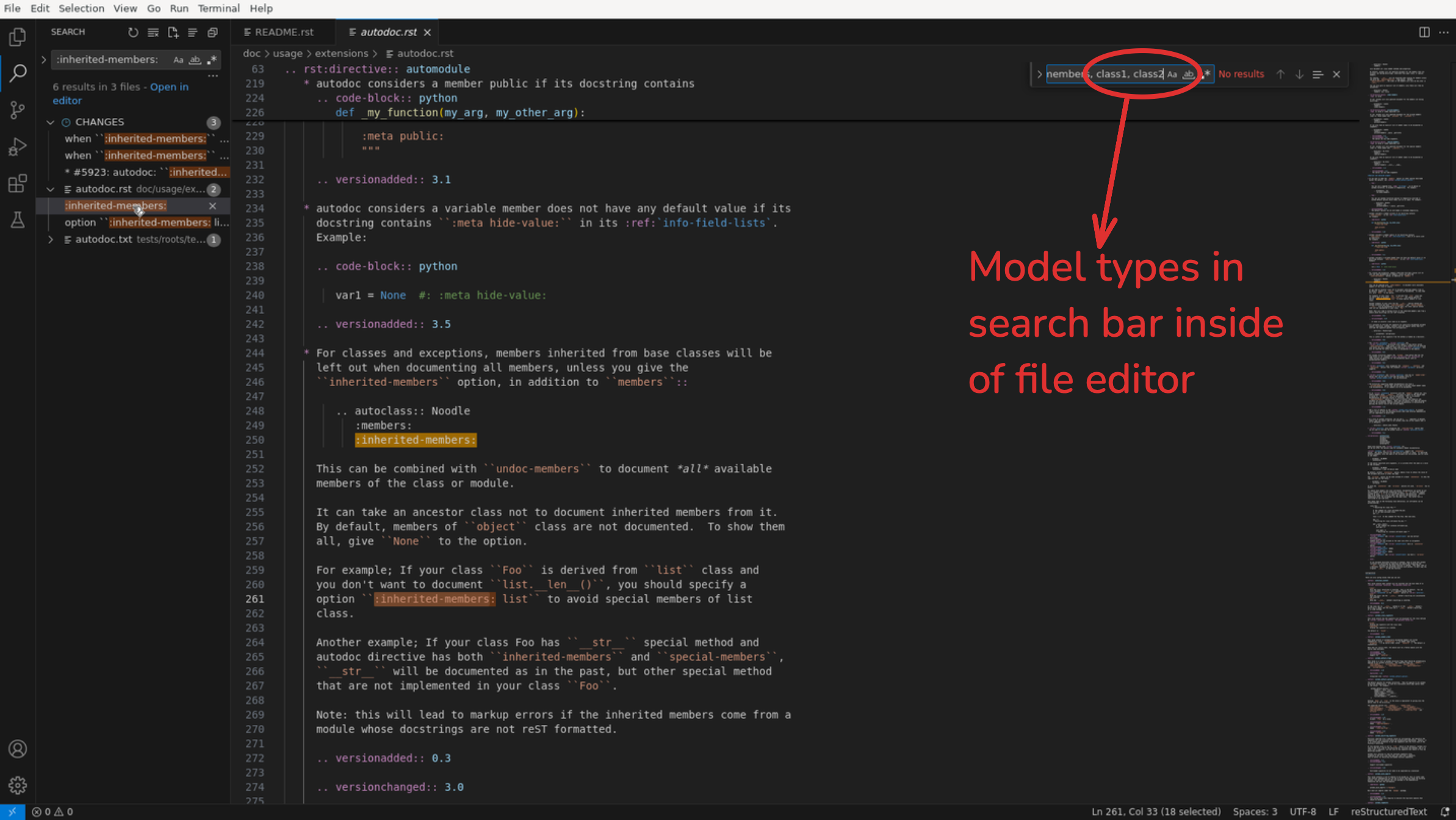

- We recast software engineering as direct interaction with an IDE through visual perception and basic actions such as typing and clicking

- This lets agents perform any task possible in an IDE and use any tool available there, without requiring specialized APIs

- To achieve this goal, we introduce:

1. Programming with Pixels (PwP): A software engineering agent environment for computer-use agents 🖥️

2. PwP-Bench: A benchmark spanning 15 diverse SWE tasks across 8 programming languages 📊

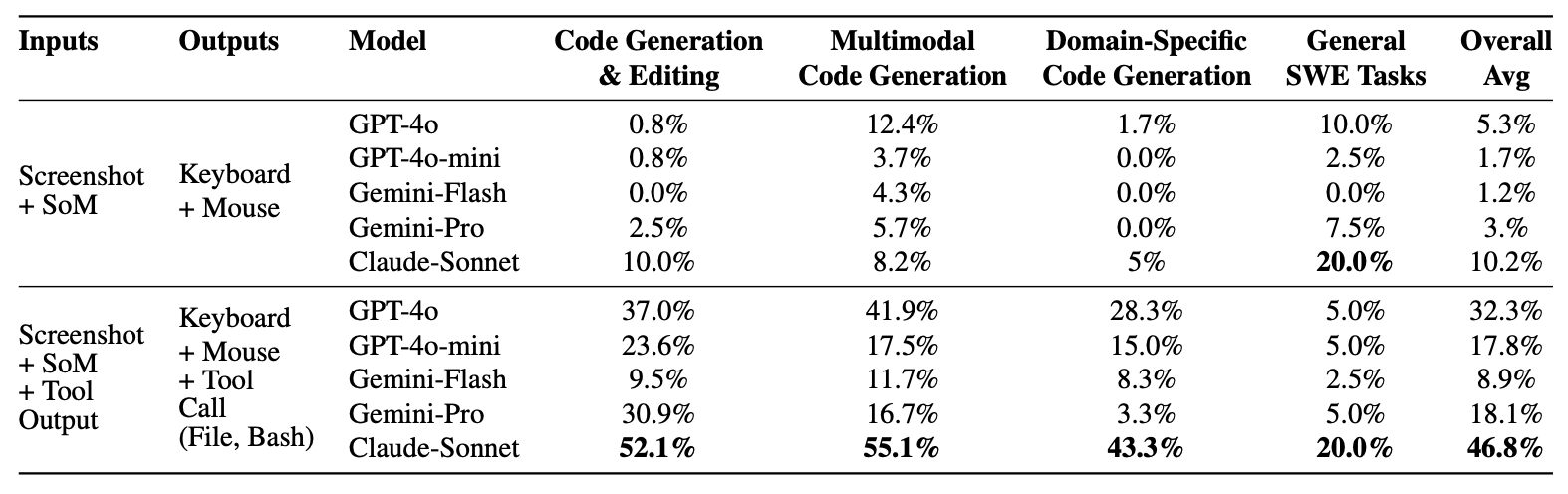

3. Experimental results showing that general-purpose computer-use agents can approach, and in some cases surpass, specialized tool-based agents 📈